The Cetitec Distributed Communication Framework (CDCF) is designed to provide an easy-to-configure, easy-to-use and easy-to-deploy communication solution for the Asymmetric Multiprocessing (AMP) architectures commonly found in modern embedded systems.

CDCF is based on OpenAMP, which uses libmetal and virtio as its foundation components.

Multicore solutions with cores of different architectures and roles are common in embedded applications. In these, one or more MCU cores communicate with DSP cores, as well as with other low-level or real-time MCU units dedicated to specific tasks. Beyond hard-silicon AMP solutions, FPGA users may add further VHDL-based soft cores with different architectures to the mix. There may also be a requirement to run completely different operating systems, or even bare-metal applications, on different cores.

Shared memory, hardware semaphores, inter-processor interrupts and similar low-level synchronization mechanisms are well-known ways to share resources and communicate between cores. Even so, companies such as Texas Instruments, Xilinx, Mentor Graphics and others have worked on more standardized solutions, so that developers do not have to solve the same problems over and over again.

More details about OpenAMP design and requirements can be found at the following links:

https://openamp.readthedocs.io/en/latest/index.html

https://www.openampproject.org/docs/blogs/HypervisorlessVirtioBlog_Feb2021.pdf

https://github.com/OpenAMP/libmetal

OpenAMP can be used as is, but there is usually a lot of work involved before application development can start. Porting to specific hardware and OS platforms is only the first step. The communication concept must also be designed and integrated into the user application, and this has to be redone each time the application requirements change.

OpenAMP imposes few requirements on how hosts and remotes are connected. For example, it does not specify the number of host cores, or whether remote cores communicate only through a host or directly with one another. It also places no requirements on the organization, priorities or content of tasks. Tasks may even be absent altogether, with a state machine driven by callback handlers used instead. Furthermore, the API and concepts provided by OpenAMP (more precisely, by RPMsg) are difficult to understand and use.

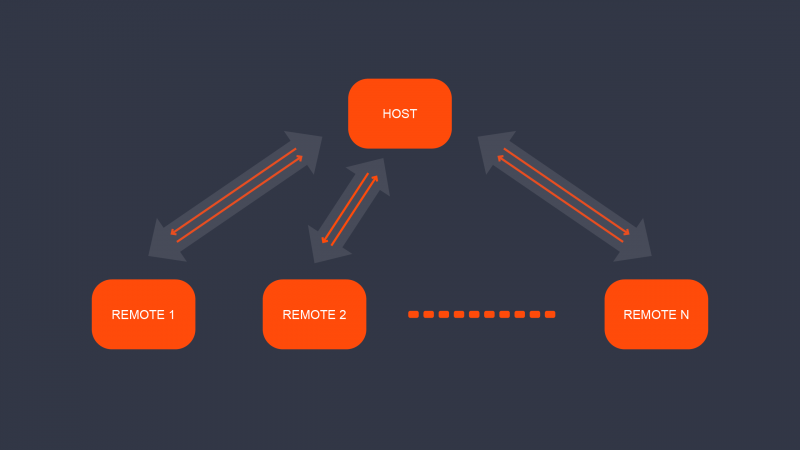

Some topology possibilities are shown below.

Simpler API and libraries built from the same set of sources

The goal of CDCF is to provide more structure, fewer degrees of freedom and a simpler, user-oriented approach to building communication channels, with clear usage examples. It also provides a centralized configuration and a library for each core, built for the different architectures from the same set of sources. Any multicore application should be designed to minimize code replication. This is challenging, because the code for different cores may look similar or almost identical but still has to be built for different architectures (possibly with different endianness). Some tasks should be made universal so that their behavior adapts to the role of the core.
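Endianness is one place where building the same sources for different architectures needs care. A common approach is to never copy C structs into shared memory directly, and instead serialize every multi-byte field in one fixed byte order. The sketch below follows that approach. The header layout (a hypothetical `cdcf_msg_hdr` with `cmd_id`, `len` and `seq` fields) and the choice of little-endian wire order are assumptions for illustration. They are not CDCF's documented message format.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical message header; field names are illustrative only. */
typedef struct {
    uint16_t cmd_id; /* command or message type */
    uint16_t len;    /* payload length in bytes */
    uint32_t seq;    /* sequence number for matching replies */
} cdcf_msg_hdr;

#define CDCF_HDR_WIRE_SIZE 8u /* 2 + 2 + 4 bytes, no padding on the wire */

/* Write values byte by byte in little-endian order, independent of the
 * native endianness of the core running this code. */
static void put_le16(uint8_t *p, uint16_t v)
{
    p[0] = (uint8_t)(v & 0xFFu);
    p[1] = (uint8_t)(v >> 8);
}

static void put_le32(uint8_t *p, uint32_t v)
{
    put_le16(p, (uint16_t)(v & 0xFFFFu));
    put_le16(p + 2, (uint16_t)(v >> 16));
}

static uint16_t get_le16(const uint8_t *p)
{
    return (uint16_t)(p[0] | (p[1] << 8));
}

static uint32_t get_le32(const uint8_t *p)
{
    return (uint32_t)get_le16(p) | ((uint32_t)get_le16(p + 2) << 16);
}

/* Encode a header into a buffer of at least CDCF_HDR_WIRE_SIZE bytes. */
void cdcf_hdr_encode(const cdcf_msg_hdr *h, uint8_t *buf)
{
    assert(h != NULL && buf != NULL);
    put_le16(buf, h->cmd_id);
    put_le16(buf + 2, h->len);
    put_le32(buf + 4, h->seq);
}

/* Decode a header from a buffer produced by cdcf_hdr_encode(). */
void cdcf_hdr_decode(const uint8_t *buf, cdcf_msg_hdr *h)
{
    assert(h != NULL && buf != NULL);
    h->cmd_id = get_le16(buf);
    h->len    = get_le16(buf + 2);
    h->seq    = get_le32(buf + 4);
}
```

Encoding and decoding use shifts rather than pointer casts. As a result, the same source produces the same byte sequence on little-endian and big-endian cores, and it never performs unaligned multi-byte accesses on the shared buffer.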

Centralized CDCF configuration

All constants, both used and generated, are kept in a single configuration file. Header files specific to a core role (host/remote) or architecture (M4/R52) are generated and deployed to the core-specific build folder, which minimizes the possibility of multiple conflicting definitions. Each core-specific application will always be unique, and it may contain significant amounts of replicated code. CDCF helps keep this to a minimum and reduces application size by using a common code base wherever possible.
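The sketch below illustrates the single-source idea. It models the generated configuration as one constant table that every core's build would include, and each core selects its own entry by index, for example via a compile-time define. The `cdcf_core_cfg` type, the table layout and the core names are hypothetical. This document does not show the actual headers that CDCF generates.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical roles; the real generated headers may differ. */
typedef enum { CDCF_ROLE_HOST, CDCF_ROLE_REMOTE } cdcf_role;

typedef struct {
    const char *name;    /* illustrative core name */
    cdcf_role   role;    /* host or remote */
    int         channel; /* channel shared with the host; -1 for the host */
} cdcf_core_cfg;

/* Single source of truth, included unchanged by every core's build. */
static const cdcf_core_cfg cdcf_cores[] = {
    { "host_core", CDCF_ROLE_HOST,   -1 },
    { "remote_a",  CDCF_ROLE_REMOTE,  0 },
    { "remote_b",  CDCF_ROLE_REMOTE,  1 },
};

#define CDCF_NUM_CORES (sizeof cdcf_cores / sizeof cdcf_cores[0])

/* Each core is built with its own index (e.g. -DCDCF_CORE_INDEX=1), so the
 * same source can branch on the role found in its own entry. */
const cdcf_core_cfg *cdcf_self(size_t core_index)
{
    assert(core_index < CDCF_NUM_CORES);
    return &cdcf_cores[core_index];
}

/* Derived value: the host creates one channel per remote. */
size_t cdcf_host_channel_count(void)
{
    size_t n = 0;
    for (size_t i = 0; i < CDCF_NUM_CORES; ++i)
        if (cdcf_cores[i].role == CDCF_ROLE_REMOTE)
            ++n;
    return n;
}
```

All cores derive their values from the same table, so a remote's channel index cannot drift from the one the host uses to create it.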

Defined communication topology and architecture

Topologies built on top of OpenAMP can range from simple core-to-core communication to a full mesh in which every core talks to every other core. To keep things manageable and the memory footprint under control, CDCF uses a star topology. There is always a single host talking to N remote cores. Direct remote-to-remote communication is not possible, because it would require up to N × (N − 1) / 2 additional channels, one per pair of remotes (that is, N × (N − 1) channel ends spread over the remotes). Each core would also have to act as both host and remote.
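A few lines of arithmetic confirm the channel counts behind the star topology. With N remotes, the star needs N channels. Linking every pair of remotes directly would add N(N − 1)/2 bidirectional channels, and a full mesh of all N + 1 cores needs (N + 1)N/2. The helper names below are illustrative.

```c
#include <assert.h>
#include <stdint.h>

/* Star: one bidirectional channel per remote, all created by the host. */
uint32_t star_channels(uint32_t n_remotes)
{
    return n_remotes;
}

/* Extra channels needed if every pair of remotes were linked directly. */
uint32_t remote_to_remote_channels(uint32_t n_remotes)
{
    assert(n_remotes < 65536u); /* keep the product within 32 bits */
    return n_remotes == 0u ? 0u : n_remotes * (n_remotes - 1u) / 2u;
}

/* Full mesh of the host plus N remotes: one channel per pair of cores. */
uint32_t mesh_channels(uint32_t n_remotes)
{
    assert(n_remotes < 65535u); /* keep the product within 32 bits */
    uint32_t cores = n_remotes + 1u;
    return cores * (cores - 1u) / 2u;
}
```

For example, with four remotes the star needs 4 channels. Direct remote-to-remote links would add 6 more, giving the 10 channels of a full mesh, and each remote would then have to act as host on some of them.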

The wide bidirectional arrows in the picture represent channels, which correspond directly to the OpenAMP concept of a channel. The host is responsible for creating all channels in the system, while each remote needs only one channel in this topology. Note that the channel a remote references must be the same one the host created for that specific remote. Virtio, which manages the buffers in shared memory, takes care of the rest.
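This ownership rule can be modeled as a host-owned table with one slot per remote. The host fills every slot, and a remote may only attach to the slot reserved for it. In the sketch below, the `cdcf_channel` type, `host_create_channels()` and `remote_attach()` are hypothetical stand-ins. In OpenAMP the actual shared state is the virtio device and its buffers in shared memory.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define CDCF_MAX_REMOTES 4 /* illustrative limit */

/* Hypothetical channel descriptor. */
typedef struct {
    bool     created;   /* set only by the host */
    unsigned remote_id; /* the only remote allowed to use this channel */
} cdcf_channel;

/* Would live in shared memory on real hardware; a static array here. */
static cdcf_channel channels[CDCF_MAX_REMOTES];

/* Host side: create exactly one channel per remote. */
void host_create_channels(unsigned n_remotes)
{
    assert(n_remotes <= CDCF_MAX_REMOTES);
    for (unsigned id = 0; id < n_remotes; ++id) {
        channels[id].created = true;
        channels[id].remote_id = id;
    }
}

/* Remote side: attach to the channel the host created for this remote.
 * Returns NULL if the host has not created it (yet). */
cdcf_channel *remote_attach(unsigned remote_id)
{
    if (remote_id >= CDCF_MAX_REMOTES || !channels[remote_id].created)
        return NULL;
    assert(channels[remote_id].remote_id == remote_id);
    return &channels[remote_id];
}
```

Because remotes never create channels, a remote that starts before the host simply sees NULL and can retry later.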

Each channel has two unidirectional virtio buffers, represented by the narrow solid arrows in the picture. So far, no distinction has been made regarding the traffic flowing through a specific channel, because a channel is just a bidirectional transfer pipe. How, then, can information from different sources be mixed and conveyed through a single channel?
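Conceptually, each direction of a channel behaves like a single-producer/single-consumer queue in shared memory. The sketch below models a channel as two such rings, using hypothetical `ring` and `channel` types. It is a simplified, single-threaded model and does not reproduce the actual virtio ring layout. Real shared-memory code would also need memory barriers, cache maintenance and an inter-processor interrupt to notify the peer.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RING_SLOTS 4  /* illustrative; a power of two */
#define SLOT_SIZE  64 /* bytes per message slot */

/* One direction of a channel: single producer, single consumer. */
typedef struct {
    uint8_t  data[RING_SLOTS][SLOT_SIZE];
    size_t   len[RING_SLOTS];
    unsigned head; /* next slot to write (advanced by producer only) */
    unsigned tail; /* next slot to read (advanced by consumer only) */
} ring;

/* A channel is two independent rings, one per direction. */
typedef struct {
    ring to_remote; /* host writes, remote reads */
    ring to_host;   /* remote writes, host reads */
} channel;

/* Producer: returns false when no buffer is free. */
bool ring_put(ring *r, const void *msg, size_t n)
{
    assert(n > 0 && n <= SLOT_SIZE);
    if (r->head - r->tail == RING_SLOTS)
        return false; /* full: caller must retry or wait */
    unsigned i = r->head % RING_SLOTS;
    memcpy(r->data[i], msg, n);
    r->len[i] = n;
    r->head++; /* real hardware: barrier here, then notify the peer */
    return true;
}

/* Consumer: copies the next message into out (at least SLOT_SIZE bytes).
 * Returns its length, or 0 if the ring is empty. */
size_t ring_get(ring *r, void *out)
{
    if (r->head == r->tail)
        return 0;
    unsigned i = r->tail % RING_SLOTS;
    size_t n = r->len[i];
    memcpy(out, r->data[i], n);
    r->tail++;
    return n;
}
```

With one ring per direction, each index has exactly one writer. This is what lets the two cores share the channel without a lock.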

The distinction is made on the receiving side using the OpenAMP concept of endpoints. An endpoint is either created together with the channel or created later with a reference to a specific channel. At creation time, a callback function (the endpoint handler) is defined for each endpoint, and the system calls it when data for that endpoint is received. Endpoint IDs can of course be reused on different channels. A host receiving data for a given endpoint on different channels can therefore decide what to do based on the endpoint ID alone, without looking into the data at that point. Each remote also defines a handler for each of its endpoints.
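Receive-side demultiplexing by endpoint ID can be sketched as a handler table indexed by that ID. In OpenAMP the per-endpoint handler is the callback registered with `rpmsg_create_ept()`. The code below does not use OpenAMP, though. It is a standalone model in which `ept_register()`, `ept_dispatch()`, the handler signature and the endpoint IDs are all hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical endpoint IDs, reused identically on every channel. */
enum { EPT_DATA = 1, EPT_COMMAND = 2, MAX_EPTS = 8 };

/* Handler type, loosely modeled on an RPMsg endpoint callback: it gets the
 * payload plus the channel the message arrived on. */
typedef int (*ept_handler)(unsigned channel, const void *data, size_t len);

static ept_handler ept_table[MAX_EPTS];

/* Register one handler per endpoint ID; the same ID serves all channels. */
int ept_register(uint32_t ept_id, ept_handler cb)
{
    assert(cb != NULL);
    if (ept_id >= MAX_EPTS || ept_table[ept_id] != NULL)
        return -1; /* out of range or already registered */
    ept_table[ept_id] = cb;
    return 0;
}

/* Receive path: choose the handler from the endpoint ID alone,
 * without inspecting the payload. */
int ept_dispatch(unsigned channel, uint32_t ept_id, const void *data, size_t len)
{
    if (ept_id >= MAX_EPTS || ept_table[ept_id] == NULL)
        return -1; /* unknown endpoint: drop the message */
    return ept_table[ept_id](channel, data, len);
}

/* Example handlers that only record what they received. */
static unsigned last_data_channel, command_count;

static int on_data(unsigned channel, const void *data, size_t len)
{
    (void)data;
    (void)len;
    last_data_channel = channel;
    return 0;
}

static int on_command(unsigned channel, const void *data, size_t len)
{
    (void)channel;
    (void)data;
    (void)len;
    command_count++;
    return 0;
}
```

A single registration per endpoint ID covers every channel, and the channel argument tells the handler which remote the message came from.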

The transmitting side only needs to send traffic for specific endpoints on the channel. OpenAMP and the underlying RPMsg, virtio and libmetal infrastructure move the data through shared memory and notify the core on the other side that data has arrived. The application is still responsible for buffer availability: it must check the return status of each send call and retransmit or wait when necessary.
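A bounded retry loop around a non-blocking send is one way to handle buffer availability. OpenAMP offers both a blocking `rpmsg_send()` and a non-blocking `rpmsg_trysend()`. The sketch below calls neither of them. Instead it uses a hypothetical `try_send()` stub that reports "no buffer" a configurable number of times, so the retry logic can be exercised on its own. The status codes and the `wait_for_buffer()` hook are likewise illustrative.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical status codes for a non-blocking send. */
#define SEND_OK        0
#define SEND_NO_BUFFER (-1) /* all TX buffers in use; try again later */
#define SEND_ERROR     (-2) /* unrecoverable, e.g. peer not ready */

/* Stub transport: fails with SEND_NO_BUFFER busy_count times, then succeeds.
 * Replace with the real send call on the target. */
static int busy_count;

static int try_send(unsigned ept, const void *data, size_t len)
{
    (void)ept;
    (void)data;
    (void)len;
    if (busy_count > 0) {
        busy_count--;
        return SEND_NO_BUFFER;
    }
    return SEND_OK;
}

/* Hypothetical wait hook; on an RTOS this would be a short task delay. */
static unsigned waits;
static void wait_for_buffer(void) { waits++; }

/* Retry while buffers are exhausted, up to max_attempts sends in total.
 * Any other status (success or hard error) is returned immediately. */
int send_with_retry(unsigned ept, const void *data, size_t len, int max_attempts)
{
    assert(max_attempts > 0);
    int rc = SEND_NO_BUFFER;
    for (int attempt = 0; attempt < max_attempts; ++attempt) {
        rc = try_send(ept, data, len);
        if (rc != SEND_NO_BUFFER)
            break;
        if (attempt + 1 < max_attempts)
            wait_for_buffer(); /* give the peer time to free a buffer */
    }
    return rc;
}
```

Bounding the attempts means a stalled peer produces a reportable error rather than a hung sender. The caller can then decide whether to drop the message or escalate.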

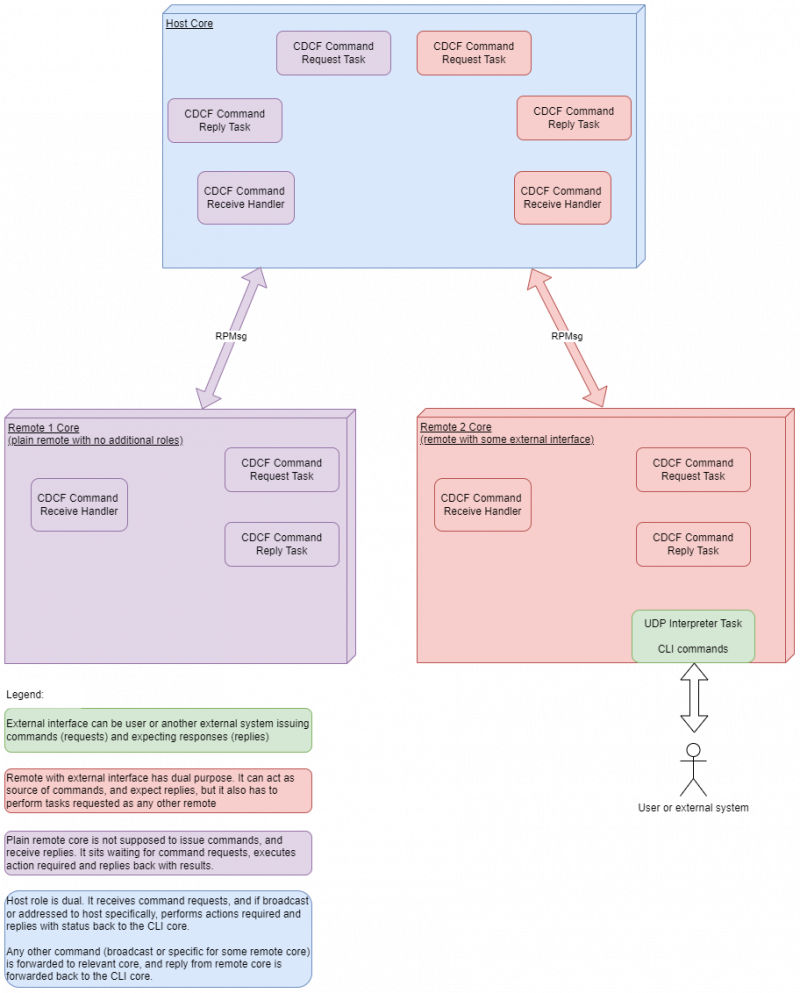

The CDCF reference application with performance measurement demonstrates the principle described above, using the CDCF library on a target with a host and two remote cores. An Ethernet-based CLI running on one remote core serves as the user interface. Any other external system or interface (e.g. another board sending CAN or Ethernet traffic) could replace this CLI as the point where commands are issued and results are returned from the multicore system running CDCF.

The host forwards requests from the CLI or external system to the other remotes. This is a typical client-server architecture with predictable resource requirements and a clear responsibility for each core.
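In this client-server scheme, one way for the host to send each reply back to the right requester is to record, for every forwarded request, the channel it arrived on. The record is keyed by a sequence number that the remote echoes in its reply. The pending-request table, the sequence-number convention and the function names below are assumptions for illustration. They are not taken from the reference application.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define MAX_PENDING 8     /* illustrative */
#define ORIGIN_NONE (-1)

/* Host-side bookkeeping for one outstanding request. */
typedef struct {
    bool     used;
    uint32_t seq;    /* request sequence number, echoed in the reply */
    int      origin; /* channel the request arrived on */
} pending_req;

static pending_req pending[MAX_PENDING];

/* Record a request before forwarding it to the target remote.
 * Returns false if the table is full (caller should reject or retry). */
bool host_track_request(uint32_t seq, int origin_channel)
{
    assert(origin_channel >= 0);
    for (int i = 0; i < MAX_PENDING; ++i) {
        if (!pending[i].used) {
            pending[i] = (pending_req){ true, seq, origin_channel };
            return true;
        }
    }
    return false;
}

/* On a reply, look up where the request came from and free the slot.
 * Returns the origin channel, or ORIGIN_NONE for an unknown sequence. */
int host_route_reply(uint32_t seq)
{
    for (int i = 0; i < MAX_PENDING; ++i) {
        if (pending[i].used && pending[i].seq == seq) {
            pending[i].used = false;
            return pending[i].origin;
        }
    }
    return ORIGIN_NONE;
}
```

The table is fixed-size, so the host's memory use stays predictable, which matches the bounded-resource goal of the client-server design.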

As mentioned before, channel creation is the host's responsibility. Because the host knows which channel belongs to each remote, it can easily forward replies from remotes back to the origin (the CLI, another remote requesting a service, or the external system). To distinguish CLI commands from user traffic, the reference application reserves one endpoint per channel for commands (let's call it cdcfCommand). Another endpoint on the same channel carries user traffic, using a very simple handler that forwards the traffic to the other core in a predefined way. More endpoints could be defined for other purposes, similar to ports in TCP/IP communication. The reference application, however, is kept reasonably simple with one data endpoint and one command endpoint. Each endpoint has its own handler, but the cdcfCommand endpoint also needs data queues and tasks responsible for command requests and replies. This does not interfere with the other endpoint (cdcfData), which keeps the user data flowing using only a simple and efficient callback handler.
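The split between the two endpoints can be sketched as follows. The data handler forwards inside the callback, while the command handler only copies the request into a queue that a command task later drains. The FIFO stands in for an RTOS message queue, and `forward_to_peer()` stands in for the real send call. These and the other names are hypothetical, mirroring the cdcfData and cdcfCommand roles rather than the reference application's code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define CMD_QUEUE_LEN 4  /* illustrative */
#define CMD_MAX_LEN   32

typedef struct {
    unsigned channel;          /* where the command came from */
    size_t   len;
    uint8_t  body[CMD_MAX_LEN];
} cmd_msg;

/* Simple FIFO standing in for an RTOS message queue. */
static cmd_msg  cmd_queue[CMD_QUEUE_LEN];
static unsigned q_head, q_tail;

/* Stand-in for sending on the peer channel; it only counts bytes. */
static unsigned forwarded_bytes;
static void forward_to_peer(unsigned channel, const void *data, size_t len)
{
    (void)channel;
    (void)data;
    forwarded_bytes += (unsigned)len;
}

/* Data endpoint: forward immediately inside the callback, no queue, no task. */
int on_data_ept(unsigned channel, const void *data, size_t len)
{
    forward_to_peer(channel, data, len);
    return 0;
}

/* Command endpoint: copy the request into the queue and return quickly;
 * the command task does the (possibly slow) processing later. */
int on_command_ept(unsigned channel, const void *data, size_t len)
{
    if (len > CMD_MAX_LEN || q_head - q_tail == CMD_QUEUE_LEN)
        return -1; /* too large or queue full */
    cmd_msg *m = &cmd_queue[q_head % CMD_QUEUE_LEN];
    m->channel = channel;
    m->len = len;
    memcpy(m->body, data, len);
    q_head++;
    return 0;
}

/* One iteration of the command task: fetch the next command, if any. */
int command_task_step(cmd_msg *out)
{
    assert(out != NULL);
    if (q_head == q_tail)
        return 0; /* nothing queued; a real task would block here */
    *out = cmd_queue[q_tail % CMD_QUEUE_LEN];
    q_tail++;
    return 1;
}
```

Keeping the data callback free of queues and tasks keeps its latency low. The command path can take as long as a request needs without stalling user traffic.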

This performance measurement reference application is a good example of what may be involved in a real-world scenario using core-to-core communication in AMP systems.

The picture below shows the block diagram of the CDCF Reference Application implementing the performance monitoring example.

T: +49 (7231) 95688-0

E: info@cetitec.com